CXL: The key to memory capacity in next

- 2023-09-27 10:44:56

We’re on the verge of a new era of computing that will likely bring major changes to the data center, thanks to the growing dominance of artificial intelligence (AI) and machine learning (ML) applications in almost every industry. These technologies are driving massive demand for compute speed and performance. However, advanced workloads like AI/ML also present several major memory challenges for the data center.

These challenges come at a critical time: AI/ML applications are growing in popularity, and so is the sheer quantity of data being produced. In effect, just as the pressure for faster computing increases, the ability to meet that need through traditional means decreases.

Solving the data center memory dilemma

To keep advancing computing performance, chipmakers have steadily added more cores per CPU, with counts rising rapidly in recent years from 32 to 48 to 96 and now to more than 100. The challenge is that system memory bandwidth has not scaled at the same rate, which creates a bottleneck. Beyond a certain point, every core past the first one or two dozen is starved of bandwidth, so the benefit of adding those additional cores diminishes.
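To make the bottleneck concrete, here is some back-of-the-envelope arithmetic. It assumes a hypothetical socket with 12 DDR5-4800 channels (64 data bits each) and divides the peak theoretical bandwidth evenly across cores; the channel count and speed grade are illustrative assumptions, not figures for any specific CPU.

```python
# Back-of-the-envelope per-core bandwidth. The 12-channel, DDR5-4800 socket
# is a hypothetical assumption for illustration, not a specific product.
MT_PER_S = 4800          # assumed DDR5-4800 transfer rate (mega-transfers/s)
BYTES_PER_TRANSFER = 8   # 64 data bits per channel
CHANNELS = 12            # assumed DDR channels per socket (held fixed)


def peak_channel_bandwidth_gbs(mt_per_s: int, bytes_per_transfer: int) -> float:
    """Peak theoretical bandwidth of one channel in GB/s (10**9 bytes/s)."""
    return mt_per_s * 1e6 * bytes_per_transfer / 1e9


def bandwidth_per_core_gbs(cores: int) -> float:
    """Socket peak bandwidth split evenly across cores; channel count stays fixed."""
    socket_bw = CHANNELS * peak_channel_bandwidth_gbs(MT_PER_S, BYTES_PER_TRANSFER)
    return socket_bw / cores


if __name__ == "__main__":
    for cores in (32, 48, 96, 128):
        print(f"{cores:>3} cores: {bandwidth_per_core_gbs(cores):5.1f} GB/s per core")
```

Sustained bandwidth in practice is lower than this theoretical peak, but the trend is the point: with a fixed channel count, per-core bandwidth falls in proportion to core count (about 14.4 GB/s at 32 cores versus 3.6 GB/s at 128 under these assumptions).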

There are also practical limits on memory capacity, given the finite number of DDR memory channels that can be added to a CPU. This is a critical consideration for infrastructure-as-a-service (IaaS) deployments and for workloads such as AI/ML and in-memory databases.

Enter Compute Express Link (CXL). With memory such a key enabler of steady computing growth, it is gratifying how the industry has coalesced around CXL as the technology to tackle these big data center memory challenges.

CXL is a new interconnect standard on an aggressive development roadmap, aimed at delivering increased performance and efficiency in data centers. In 2022, the CXL Consortium released the CXL 3.0 specification, whose new features could change the way data centers are architected and boost overall performance through enhanced scaling. CXL 3.0 doubles the data rate to 64 GT/s by adopting the PCIe 6.0 physical interface, which uses PAM4 signaling. The 3.0 update also adds multi-level (fabric-attached) switching, allowing highly scalable memory pooling and sharing.
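The 64 GT/s figure translates into raw link bandwidth as sketched below. The sketch assumes one bit per transfer per lane and deliberately ignores FLIT framing, CRC, and FEC overhead, so actual payload throughput will be somewhat lower than these numbers.

```python
# Raw CXL 3.0 / PCIe 6.0 link arithmetic. Assumption: one bit per transfer
# per lane, with FLIT framing, CRC, and FEC overhead ignored, so real
# payload throughput is somewhat lower than these figures.
PAM4_BITS_PER_SYMBOL = 2  # PAM4 uses four voltage levels, i.e. 2 bits/symbol


def symbol_rate_gbaud(gt_per_s: float, bits_per_symbol: int) -> float:
    """Symbol rate (GBaud) needed to carry `gt_per_s` on one lane."""
    return gt_per_s / bits_per_symbol


def raw_bandwidth_gbs(gt_per_s: float, lanes: int) -> float:
    """Raw bandwidth in GB/s, per direction, for a link of `lanes` lanes."""
    return gt_per_s * lanes / 8  # 8 bits per byte


if __name__ == "__main__":
    print(f"64 GT/s PAM4 -> {symbol_rate_gbaud(64, PAM4_BITS_PER_SYMBOL):.0f} GBaud per lane")
    for lanes in (4, 8, 16):
        print(f"x{lanes:<2}: {raw_bandwidth_gbs(64, lanes):4.0f} GB/s per direction")
```

Under these assumptions a x16 link carries roughly 128 GB/s in each direction before protocol overhead, and PAM4 lets each lane reach 64 GT/s at a 32 GBaud symbol rate.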

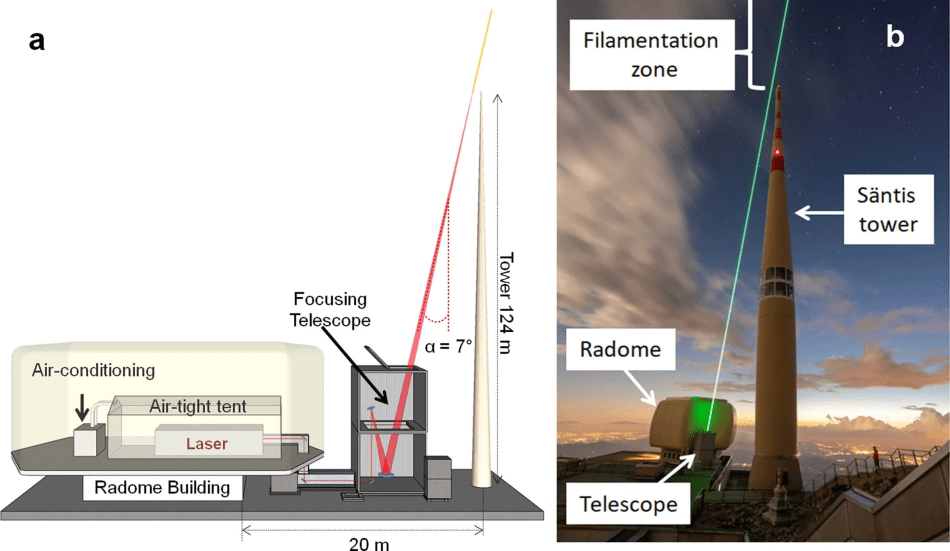

CXL’s pin-efficient architecture helps overcome the limitations of package scaling by providing more memory bandwidth and capacity. Significant amounts of additional bandwidth and capacity can then be delivered to processors—above and beyond that of the main memory channels—to feed data to the rapidly increasing number of cores in multi-core processors.

Figure 1 CXL provides memory bandwidth and capacity to processors to feed data to the rapidly increasing number of cores. Source: Rambus

Once memory bandwidth and capacity are delivered to individual CPUs, GPUs, and accelerators at the required performance, the next consideration is using that memory efficiently. Unless every component in a heterogeneous computing system is running at maximum performance, memory resources can be left underutilized, or “stranded.” With memory accounting for upward of half of server bill-of-materials (BOM) costs, the ability to share memory resources flexibly and at scale will be key.

This is another area where CXL offers an answer. It provides a low-latency, cache-coherent serial interface between computing devices and memory devices, so CPUs and accelerators can seamlessly share a common memory pool. Memory can then be allocated on demand: rather than provisioning every processor and accelerator for worst-case loading, architects can deploy designs that balance memory between direct-attached and pooled resources, reducing memory stranding.
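The stranding argument can be checked with a toy model. The demand numbers, host names, and the 256 GB local allocation below are invented for illustration. The sketch compares provisioning every host for its own peak against giving each host a smaller direct-attached allocation plus a shared pool sized for the largest simultaneous overflow.

```python
from typing import Dict, List

# Hypothetical memory demand in GB per host at four sample times.
# The numbers are invented: the point is that hosts rarely peak together.
DEMAND: Dict[str, List[int]] = {
    "host_a": [200, 500, 250, 220],
    "host_b": [480, 210, 230, 260],
    "host_c": [220, 240, 510, 230],
}


def worst_case_capacity(demand: Dict[str, List[int]]) -> int:
    """Direct-attached only: each host is provisioned for its own peak."""
    return sum(max(samples) for samples in demand.values())


def pooled_capacity(demand: Dict[str, List[int]], local_gb: int) -> int:
    """Each host gets `local_gb` of direct-attached memory; demand above that
    comes from a shared pool sized for the largest simultaneous overflow."""
    steps = len(next(iter(demand.values())))
    pool_gb = max(
        sum(max(0, samples[t] - local_gb) for samples in demand.values())
        for t in range(steps)
    )
    return local_gb * len(demand) + pool_gb


def average_unused(demand: Dict[str, List[int]], capacity_gb: int) -> float:
    """Provisioned capacity minus mean total demand: memory left stranded."""
    steps = len(next(iter(demand.values())))
    totals = [sum(samples[t] for samples in demand.values()) for t in range(steps)]
    return capacity_gb - sum(totals) / steps


if __name__ == "__main__":
    for name, cap in (("worst case", worst_case_capacity(DEMAND)),
                      ("local + pool", pooled_capacity(DEMAND, local_gb=256))):
        print(f"{name:>12}: {cap} GB provisioned, "
              f"{average_unused(DEMAND, cap):.1f} GB unused on average")
```

With these made-up numbers, worst-case provisioning needs 1,490 GB while the direct-attached-plus-pool design needs 1,022 GB, because the three hosts peak at different times. The saving depends on how correlated the peaks are; if every host peaks at the same moment, pooling saves little or nothing.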

Finally, CXL enables new tiers of memory whose performance falls between that of DRAM and SSDs, without requiring changes to the CPUs, GPUs, or accelerators that use them. Pooled or shared memory may have its own set of tiers based on performance, but because CXL is an open standard interface, data center architects have more freedom to choose the best memory type for a workload, whether an older memory technology or one that has not yet reached the market, to achieve the best total cost of ownership (TCO).

Figure 2 CXL offers multiple tiers of memory with different performance characteristics. Source: Rambus

Here, CXL helps bridge the latency gap that has existed between natively supported main memory and SSD storage.
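One way to see the value of an intermediate tier is the access-weighted average latency across tiers. The latencies below are assumed, order-of-magnitude placeholders rather than measurements, an SSD access is treated as a single fixed cost, and the access split is hypothetical.

```python
from typing import Dict

# Assumed, order-of-magnitude access latencies in nanoseconds. Real figures
# depend heavily on platform, device, and queuing; treat them as placeholders.
LATENCY_NS: Dict[str, int] = {
    "local_dram": 100,
    "cxl_memory": 250,
    "nvme_ssd": 100_000,
}


def effective_latency_ns(access_pct: Dict[str, int]) -> float:
    """Access-weighted mean latency; `access_pct` maps tier -> % of accesses."""
    if sum(access_pct.values()) != 100:
        raise ValueError("access percentages must sum to 100")
    return sum(pct * LATENCY_NS[tier] for tier, pct in access_pct.items()) / 100


if __name__ == "__main__":
    # Hypothetical split: 70% of accesses hit data that fits in local DRAM.
    without_cxl = {"local_dram": 70, "nvme_ssd": 30}
    with_cxl = {"local_dram": 70, "cxl_memory": 25, "nvme_ssd": 5}
    print(f"DRAM + SSD:       {effective_latency_ns(without_cxl):8.1f} ns")
    print(f"DRAM + CXL + SSD: {effective_latency_ns(with_cxl):8.1f} ns")
```

Under these assumptions, moving most of the overflow from SSD to CXL-attached memory cuts the average from 30,070 ns to 5,132.5 ns. The SSD term still dominates whatever fraction remains there, which is why the gap between DRAM and storage matters so much.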

CXL, but when?

CXL technology will support several use models that will roll out over time. The first to be explored will be pure memory expansion (CXL 2.0), where additional bandwidth and capacity are plugged into a server in a one-to-one relationship between a compute node and a CXL memory device.

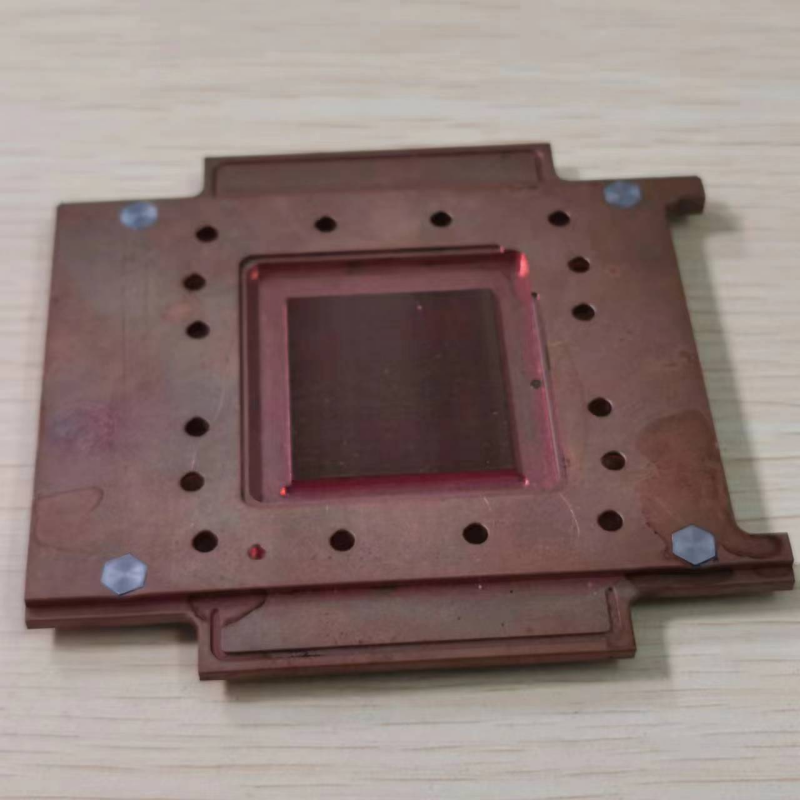

Key to implementation will be the CXL memory controller device, which will manage traffic between the memory devices and CPUs and add flexibility by moving the memory controller outside the CPU. This will allow more, and more varied, types of memory to connect to compute elements.

The next phase of deployment will likely be CXL pooling, building on pooling capabilities introduced in CXL 2.0 and extended in CXL 3.0, where CXL memory devices and compute nodes are connected in a one-to-many or many-to-many fashion. Of course, a single CXL-enabled device in a direct-connected deployment can support only a limited number of host connections. To scale beyond that, CXL switches and fabrics come into play.
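The scaling limit of direct connection can be illustrated with a simple reachability model. Everything here is hypothetical: the host and device names, the two host ports per device, and the round-robin port assignment. The switched case simply assumes any host can be routed to any device behind the switch.

```python
from itertools import product
from typing import List, Set, Tuple

Link = Tuple[str, str]  # (host, device) pair that can exchange traffic


def direct_links(hosts: List[str], devices: List[str], ports_per_device: int) -> Set[Link]:
    """Direct attach: each device connects to at most `ports_per_device` hosts.
    Hosts are assigned to device ports round-robin (a hypothetical policy)."""
    links: Set[Link] = set()
    for d_idx, device in enumerate(devices):
        for port in range(min(ports_per_device, len(hosts))):
            host = hosts[(d_idx * ports_per_device + port) % len(hosts)]
            links.add((host, device))
    return links


def switched_links(hosts: List[str], devices: List[str]) -> Set[Link]:
    """Behind a switch, assume any host can be routed to any device."""
    return set(product(hosts, devices))


def coverage(links: Set[Link], hosts: List[str], devices: List[str]) -> float:
    """Fraction of all host-device pairs that are reachable."""
    return len(links) / (len(hosts) * len(devices))


if __name__ == "__main__":
    hosts = [f"host{i}" for i in range(8)]
    devices = [f"mem{j}" for j in range(4)]
    print(f"direct:   {coverage(direct_links(hosts, devices, 2), hosts, devices):.0%}")
    print(f"switched: {coverage(switched_links(hosts, devices), hosts, devices):.0%}")
```

With eight hosts, four devices, and two host ports per device, direct attach lets each host reach only one device (25% of host-device pairs), whereas the switched topology reaches all 32 pairs.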

Switches and fabrics have the additional benefit of enabling peer-to-peer data transfers between heterogeneous compute elements and memory elements, so CPUs no longer need to be involved in every transaction. Switch and fabric architectures will be deployed at scale only when data center architects are satisfied with the latency and the reliability, availability, and serviceability (RAS) of the solution. This will take some time, but once the ecosystem matures, the possibilities for disaggregated architectures will be enormous.

Once-in-a-decade technology

CXL is a once-in-a-decade technological force with the power to revolutionize data center architecture, and it is gaining momentum at a critical moment for the computing industry. CXL can ultimately enable the data center to move to a disaggregated model, in which server designs and resources are far less rigidly partitioned.

The improved pin-efficiency of CXL not only means that more memory bandwidth and capacity are available for data-intensive workloads, but that memory can also be shared between computing nodes when needed. This enables pools of shared memory resources to be efficiently composed to meet the needs of specific workloads.

The technology is now supported by a large ecosystem of more than 150 industry players, including hyperscalers, system OEMs, platform and module makers, chipmakers, and IP providers, which in turn furthers CXL’s potential. Although CXL is still in the early stages of deployment, the CXL Consortium’s release of the 3.0 specification underscores the technology’s momentum and showcases its potential to unleash a new era of computing.

Mark Orthodoxou is VP of strategic marketing for interconnect SoCs at Rambus.
